“My father killed himself when I was 13 years old.” That was the opening line of my medical school admission essay, the one in which I tried to convince an anonymous administrator in another state that, as a suicide survivor, I was specially possessed of both strength and empathy, and that these traits made me specially worthy of becoming a doctor. In the essay I reminisced about the fun times with my dad, like when he would paint and I would sit on the floor poring over his medical textbooks. I described the medical diagrams he sketched on the backs of napkins at KFC, when he took me out to dinner on our mandated Thursdays and every other weekend. My parents were divorced by then, and these were the only times I got to see my dad. I treasured them.

I didn’t tell the whole story in that essay, though. I didn’t mention how, a week before his death, my dad showed me where his will was located. “Just in case something happens to me,” he said. I also left out the night that he attempted suicide the first time. He called my mom to tell her he’d taken pills. She got the police to trace the call to a hotel. They found him, rushed him to the hospital, and pumped his stomach. He lived two more days, until he hanged himself. I didn’t tell those stories. I was focusing on the positive, you see. I wanted to get in. The real story was too messy and painful for the confines of a university admission essay.

In my first year of medical school we learned about the warning signs of suicidality, and how to intervene by asking “Are you thinking of hurting yourself?” and “Do you have a plan?” That was when I realized that his showing me the will, and his first attempt, had been our chances to intervene. In medical school I learned how to contract for safety and, maybe, save a life. I learned that suicide is preventable, and that public safety measures, such as restricting access to guns and installing barriers on tall buildings and bridges, have not only thwarted individual attempts but have also lowered the overall suicide rate in entire regions where they have been implemented. I learned that patients who survive a suicide attempt are unlikely to go on to die by suicide. Learning how to prevent suicide made me feel empowered, but it also made me feel guilty, because I didn’t know these things when I was 13, and I wish I had.

I see the bodies of the suicidal on my autopsy table every week: the hangings, the incised wounds, and the gunshot wounds to the head and chest. Family members, the survivors, are often in denial. The manner of death “just doesn’t make sense.” Sometimes I will confide that I am a survivor too, and that, even after 35 years, my father’s death still doesn’t make sense to me either. I’ve been criticized for writing that suicide is a “selfish act,” but I stand by those words. I do so not out of a sense of abandonment by my dad, but because I have lived long enough to be suicidal myself. In those moments, when suicide “made sense,” I was so engrossed in my own pain that the tunnel vision of depression made everything else, and everyone else, irrelevant. In that way the suicidal person is selfish, as in “self-focused”: unwilling to believe that their pain can fade over time, and that other people rely on their presence in this world. Coming through those periods myself, with the unwavering love and support of my mother and, later, my husband, has made me stronger and more empathetic. I have overcome my father’s suicide, even if the wound it left has never fully healed.

I have learned that it helps to talk openly about it. The more I speak out about my father’s death, the more I discover friends who have also been touched by suicide. Silence = death when it comes to suicide, just like with HIV/AIDS. By talking openly about suicide, we can overcome the stigma associated with mental illness, and signal to those who may be suffering and suicidal (even when they aren’t mentally ill, but just going through a difficult time) that it’s okay to reach out for help. Others like me have been through it. We’ve made it to the other side. It may be hard to imagine—but it gets better.

If you are thinking of hurting yourself, or you know someone who needs help, call the National Suicide Prevention Lifeline at 1-800-273-8255 (in the US, you can now also dial 988).

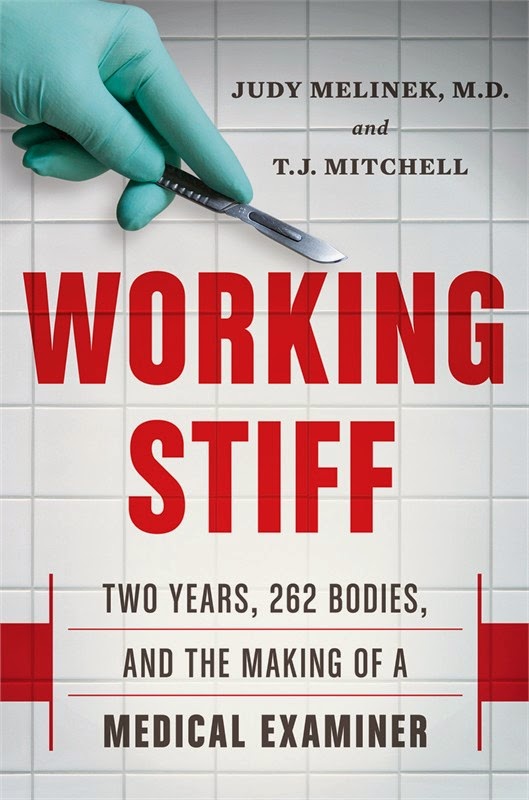

Bio: Dr. Judy Melinek is a forensic pathologist in San Francisco, California, and the CEO of PathologyExpert Inc. She is the co-author with her husband, writer T.J. Mitchell, of the New York Times bestselling memoir Working Stiff: Two Years, 262 Bodies, and the Making of a Medical Examiner. They are currently writing a forensic fiction series entitled First Cut. You can follow her on Twitter @drjudymelinek and Facebook/DrJudyMelinekMD.